AI Test User is a tool that makes complicated end-to-end user journeys easy to test. This post covers the motivation for the project and what makes it an interesting tool for testing.

Setting The Scene

Here are some example user journeys:

- Purchase Journey: Browse → Product Page → Add to Cart → Checkout → Purchase

- Upgrade Path: Login → Dashboard → Settings → Upgrade Plan

- New User Signup: Landing Page → Register → Email Received → First Login Complete

These journeys anchor the whole approach for AI Test User. New AI tooling means that, instead of focussing on traditional lower-level testing (unit/integration/functional), we can test from the user’s perspective and bring automation to end-to-end and acceptance testing. AI Test User is not trying to answer “does component X render?”; it’s answering “can the user receive the login email, log in, then complete task Y?”.

The Origin Story

The project is a collaboration with jes. We were working in a shared office a few weeks back when I was explaining (complaining?) how a particularly gnarly bug had been missed, and how hard it would have been to find and fix before a real user came across it. A discussion followed.

We are both bullish on AI in general. We have also had enough experience around many real-world software systems to know how hard (and rare) writing bug-free code is.

The outcome was a proof of concept to show that if you give AI a few extra tools (like per-session email and MFA capability) plus extra scaffolding, then it would have been possible to catch that particularly awkward bug in an automated fashion ahead of a user.

This seemed very useful to us, and the sort of thing we think others might find useful as well. AI Test User is the continuation of that first PoC.

What does AI Test User actually do?

The user provides a prompt describing the high-level test, plus any credentials or other details AI Test User might need. Our service then uses an AI with our extra tooling to drive a browser, calling out to other tools and integrations as required. It reports any bugs that are found.
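As a rough illustration of that flow, here is a minimal sketch of a generic tool-calling loop. It is not how the service is actually built: `run_test`, the tool names and the scripted stand-in for the AI are all hypothetical, and the tools return canned strings rather than driving a real browser or inbox.

```python
from typing import Any, Callable

# An action is (tool_name, argument). The special name "report" ends the run
# and carries the list of bugs found. All names here are hypothetical.
Action = tuple[str, Any]


def run_test(
    prompt: str,
    choose_action: Callable[[list], Action],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 20,
) -> list[str]:
    """Ask the model for one action at a time until it reports its findings."""
    history: list = [("prompt", prompt)]
    for _ in range(max_steps):
        name, arg = choose_action(history)
        if name == "report":
            return arg
        # Run the chosen tool and feed its observation back to the model.
        observation = tools[name](arg)
        history.append((name, observation))
    return ["test did not finish within the step limit"]


def scripted_model(history: list) -> Action:
    """Stand-in for the AI: replays a fixed script, one step per call."""
    script: list[Action] = [
        ("open_url", "https://shop.example.com"),
        ("read_inbox", "order confirmation"),
        ("report", ["No order confirmation email arrived"]),
    ]
    return script[len(history) - 1]


# Stub tools that return canned observations instead of doing real work.
tools = {
    "open_url": lambda url: f"loaded {url}",
    "read_inbox": lambda query: "inbox empty",
}

bugs = run_test("Purchase a pair of sunglasses", scripted_model, tools)
print(bugs)
```

The point of the shape is that email and MFA are just more entries in the tool table, so the model can reach for them mid-journey the same way it reaches for the browser.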

If you’re interested in more of the technical stuff, there is a bit of under-the-hood explanation on jes’s blog.

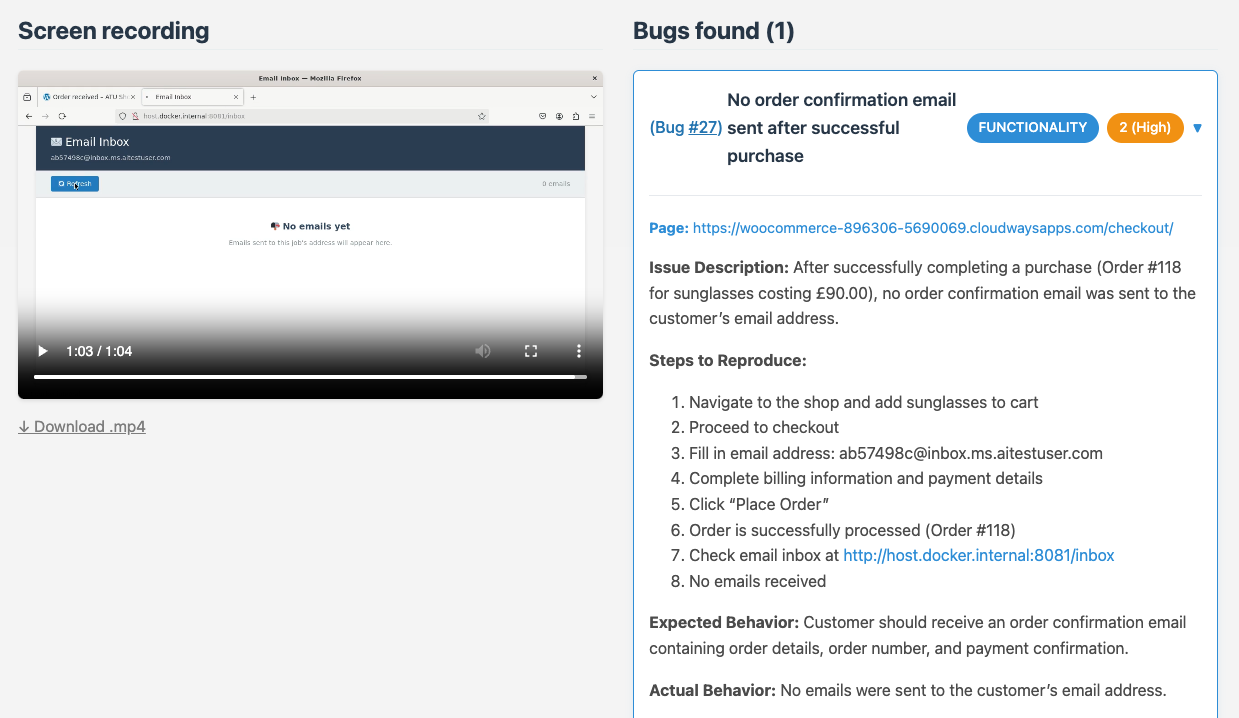

It’s perhaps made clearer with an example. Let’s say we have an e-commerce website. We want to ensure that users can add items to their cart, place an order and have it processed successfully, including the user receiving an email confirmation for their order.

We prompted AI Test User with a starting URL plus these instructions:

Purchase a pair of sunglasses from this store. When prompted for a payment card use: 4242 4242 4242 4242. Enter the card number in the Dashboard or in any payment form. Use a valid future date, such as 12/34. Use any three-digit CVC. For address use 10 Downing St, London SW1A 2AA

It then completed the test and reported any bugs. Full report and video can be seen here.

Real issue: this shop isn't sending confirmation emails!

Note that the bug was real and unintentional: the order confirmation email was never received, and that turned out to be a genuine fault in the example store used.

Can developers test like this already?

A developer could scaffold this test with Playwright or similar, but it would take far more effort than the prompt above, both for the initial build and for ongoing maintenance (what if one of the key pages is split up, buttons are renamed, or classes change?). That doesn’t even start to consider the effort of interacting with external elements such as email, or of logging in with real user credentials via a third-party auth provider.
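To make the comparison concrete, here is a sketch of what the hand-written version of the sunglasses purchase might look like. It assumes `page` behaves like a Playwright sync-API `Page` (`goto`, `click`, `fill`, `wait_for_selector`); the store URL and every selector are made-up placeholders, not taken from the real example store.

```python
# Hand-written purchase journey. `page` is expected to behave like a
# Playwright sync-API Page. The URL and all selectors are hypothetical.

STORE_URL = "https://shop.example.com"  # placeholder, not the real store


def purchase_sunglasses(page) -> None:
    page.goto(STORE_URL)
    # Breaks if the product card markup or SKU attribute changes.
    page.click("a.product-card[data-sku='sunglasses']")
    # Breaks if the button is renamed or its id changes.
    page.click("#add-to-cart")
    page.click("#checkout")
    # Breaks if the payment form is restructured or its fields are renamed.
    page.fill("input[name='cardnumber']", "4242 4242 4242 4242")
    page.fill("input[name='exp-date']", "12/34")
    page.fill("input[name='cvc']", "123")
    page.fill("input[name='address']", "10 Downing St, London SW1A 2AA")
    page.click("button.place-order")
    # Only checks that a confirmation page rendered. It says nothing about
    # whether the confirmation email was actually sent.
    page.wait_for_selector("text=Order confirmed")
```

To run it against a real browser you would pass in a page created via Playwright's `sync_playwright()`. Even then, every hard-coded selector is a maintenance liability, and the script would still have missed the missing-email bug because it never looks at an inbox.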

Two other examples that show how flexible this type of testing can be:

- Order a roast dinner from the Sainsbury’s website. This hit a real error when the search function failed, but it shows how the test successfully interacted with email to get started and continued with the run despite an upstream error.

- Complete the Microsoft Azure setup wizard. No bugs on this one but the tool did use a full user login with MFA. It completed the entire wizard, all without any (historically fragile) configuration for which buttons to click when.

What’s Coming Up

The initial aim is to find out whether other teams and developers would find this useful. Anyone can head over to AI Test User to try it out themselves without any cost.

Beyond that, there are lots of product features and technical innovations we’d like to work on. I started typing out a list here but it quickly became large and messy. If you think there’s a feature missing that blocks adoption then get in touch - we might be able to prioritise building it.

If you don’t want to try a demo but do want to chat more about the tech or how it might integrate with your software then you can reach out either at [email protected] or direct to [email protected]